MAKE YOUR ASSESSMENTS USEFUL WITH ITEM ANALYSIS

Effective assessments give educators a window into their students’ learning gaps: which topics students are struggling with, and why. Educators can then use this insight to give students constructive suggestions for improving their learning or, if necessary, to adjust their own way of teaching.

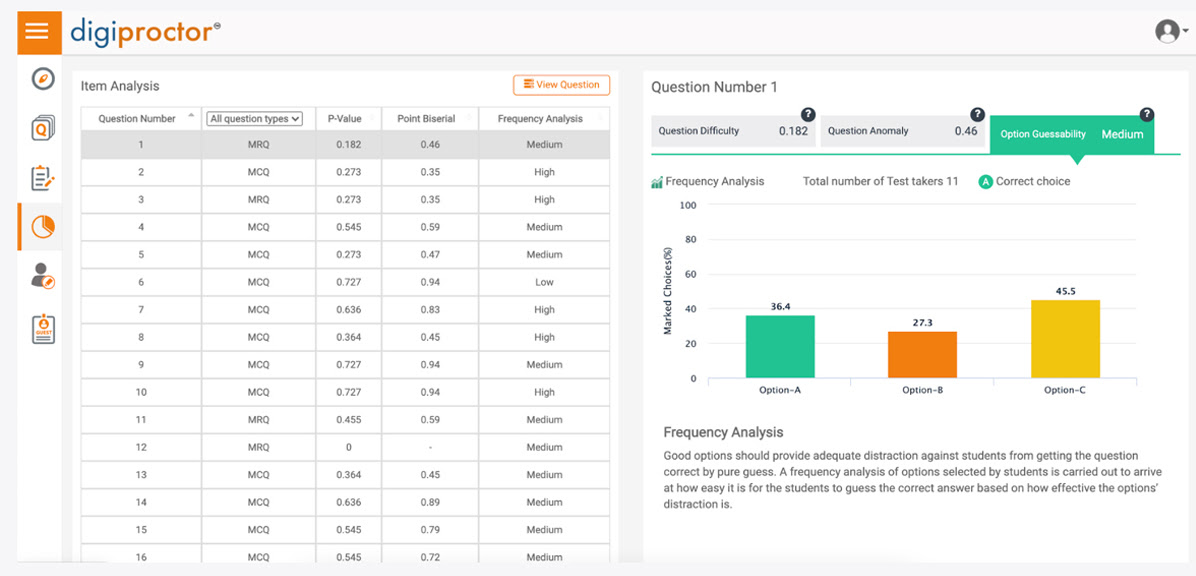

Item Analysis

Giving students feedback based on their assessment performance is essential for improving learning outcomes, but it is equally essential to design effective assessments, because only effective assessments enable precise and accurate feedback. One way to design effective assessments is to carry out an item analysis.

ITEM ANALYSIS

Item analysis involves analysing students' responses to individual assessment questions (i.e. items). The responses are interpreted to identify each question's difficulty, guessability and anomaly, which helps differentiate students by their level of knowledge in the subject. How well this differentiation is done indicates the quality of the exam and the effectiveness of the assessment.

Question difficulty

Item analysis reveals each question’s difficulty. This matters because if an item is so difficult that everyone gets it wrong, it is impossible to tell which students know the topic better. Similarly, if an item is so easy that everyone gets it right, it is equally impossible to tell who knows the topic better.

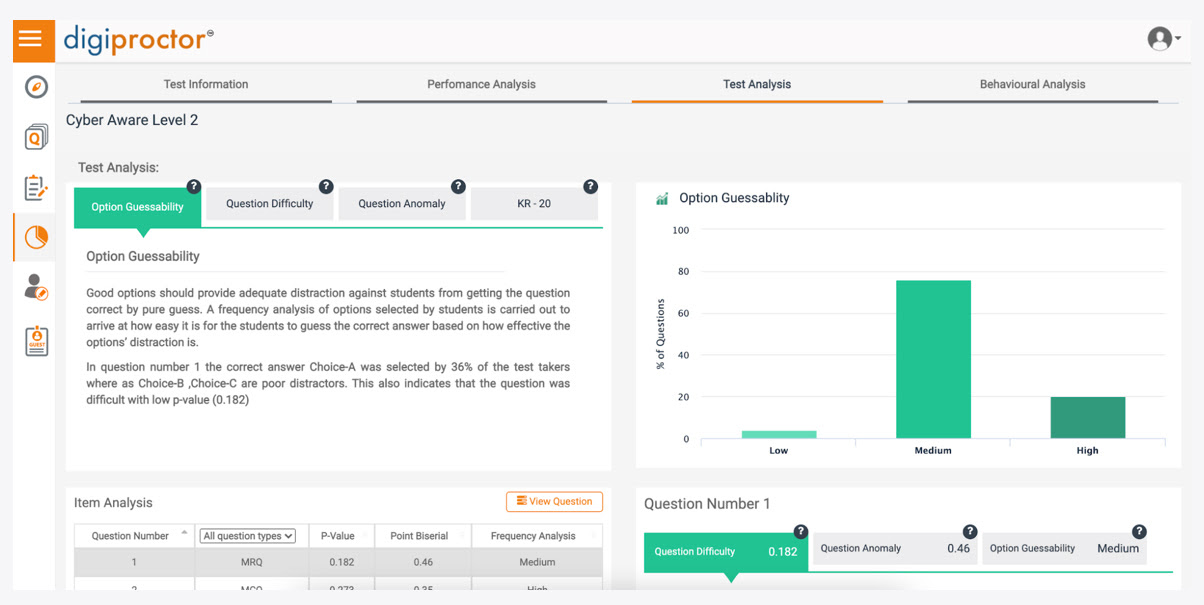

Question guessability

How easy is it for students to guess the right answer in a multiple-choice exam? For example, if a question has four options and two of them are obviously incorrect, students are left with a 50/50 chance of guessing the correct response. In multiple-choice questions, the incorrect options should be plausible enough to draw students away from the correct option.

Question anomaly

Question anomaly reflects how effectively the item discriminates between students with varying levels of knowledge. If more top scorers (students who scored high overall) than bottom scorers get the item wrong, there could be an anomaly in the question.
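As a rough illustration of the top-scorer versus bottom-scorer comparison described above, here is a minimal Python sketch. The function names, the 0/1 scoring of each response, and the choice to compare the top and bottom 27% of students are assumptions made for this example; the article does not prescribe a group size.

```python
def top_bottom_wrong_counts(item_scores, total_scores, fraction=0.27):
    """Count wrong answers to one item among top and bottom scorers.

    item_scores:  1 if the student got this item right, else 0
    total_scores: each student's overall exam score, in the same order
    fraction:     share of students in each group (assumed value)
    """
    n = len(item_scores)
    if n != len(total_scores) or n < 2:
        raise ValueError("need two equally long lists with at least 2 students")

    group_size = max(1, round(n * fraction))
    # Student indices ordered from highest to lowest overall score.
    ranked = sorted(range(n), key=lambda i: total_scores[i], reverse=True)
    top, bottom = ranked[:group_size], ranked[-group_size:]

    top_wrong = sum(1 for i in top if item_scores[i] == 0)
    bottom_wrong = sum(1 for i in bottom if item_scores[i] == 0)
    return top_wrong, bottom_wrong


def looks_anomalous(item_scores, total_scores, fraction=0.27):
    """True when more top scorers than bottom scorers missed the item."""
    top_wrong, bottom_wrong = top_bottom_wrong_counts(
        item_scores, total_scores, fraction
    )
    return top_wrong > bottom_wrong


# Hypothetical data: the two strongest students missed the item.
item = [0, 0, 1, 1, 1, 1]
totals = [10, 9, 5, 4, 2, 1]
print(looks_anomalous(item, totals))  # True
```

A flagged item is only a candidate for review: the answer key and the wording still need to be checked by a person.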

The challenge

Item analysis requires analysing student responses to individual questions and discovering patterns in them, which is difficult to do manually. Educators also typically do not have the time or bandwidth to do an item analysis after every assessment. To be practical, item analysis therefore needs automation.

Digiproctor automates Item Analysis

Digiproctor automates the entire item analysis process, converting it into a one-button-press activity. It formalizes item analysis into a standard method through which assessments can be improved.

Digiproctor presents the question difficulty as a P-value statistic (range: 0 to +1), question anomaly as a Point-biserial correlation (range: -1 to +1), and question guessability as a frequency.

Digiproctor automates item analysis covering:

- Question difficulty

- Question guessability

- Question anomaly

Question difficulty is presented as a P-value statistic ranging from 0 to +1, where 0 means very difficult (no student answered correctly) and +1 means very easy (every student answered correctly).
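To make the P-value concrete, the short Python sketch below computes it as the proportion of students who answered an item correctly. The function name and the 0/1 encoding of responses are assumptions for illustration and are not taken from Digiproctor.

```python
def item_difficulty(responses):
    """Return the P-value of one item.

    responses: list with 1 for a correct answer and 0 for an incorrect one.
    The result is the fraction of students who answered correctly.
    """
    if not responses:
        raise ValueError("responses must not be empty")
    return sum(responses) / len(responses)


# Hypothetical item answered correctly by 7 of 10 students.
scores = [1, 1, 0, 1, 0, 1, 1, 1, 0, 1]
print(item_difficulty(scores))  # 0.7
```

A value near 0 or near +1 means almost everyone got the same result, so the item says little about who knows the topic better.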

Question anomaly is presented as a point-biserial correlation statistic ranging from -1.0 to +1.0.

Items with a point-biserial correlation above 0.25 are good items. As a rule of thumb, items below 0.1 could indicate either an incorrect answer key or ambiguous question content.
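The point-biserial correlation is the ordinary Pearson correlation between a 0/1 item score and a continuous score, here the student's overall exam score. The sketch below shows one way to compute it and to apply the 0.25 and 0.1 thresholds mentioned above. The function names, the "borderline" label for values between the two thresholds, and the decision to include the item itself in the overall score are assumptions for this example, not a description of Digiproctor's implementation.

```python
import math


def point_biserial(item_scores, total_scores):
    """Pearson correlation between 0/1 item scores and overall scores.

    Returns None when the correlation is undefined, for example when
    every student got the item right or every student got it wrong.
    """
    n = len(item_scores)
    if n != len(total_scores) or n < 2:
        raise ValueError("need two equally long lists with at least 2 students")

    mean_item = sum(item_scores) / n
    mean_total = sum(total_scores) / n
    covariance = sum(
        (x - mean_item) * (y - mean_total)
        for x, y in zip(item_scores, total_scores)
    )
    var_item = sum((x - mean_item) ** 2 for x in item_scores)
    var_total = sum((y - mean_total) ** 2 for y in total_scores)
    if var_item == 0 or var_total == 0:
        return None
    return covariance / math.sqrt(var_item * var_total)


def classify_item(correlation):
    """Apply the rule-of-thumb thresholds from the text."""
    if correlation is None:
        return "undefined"
    if correlation > 0.25:
        return "good"
    if correlation < 0.1:
        return "review"  # possible wrong key or ambiguous wording
    return "borderline"  # assumed label; the text does not name this band


# Hypothetical data: students with higher totals got the item right.
item = [1, 1, 1, 0, 0]
totals = [9, 8, 7, 3, 2]
print(classify_item(point_biserial(item, totals)))  # good
```

A negative value means stronger students tended to miss the item, which is the pattern most worth checking against the answer key.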

Question guessability is presented as the frequency with which students chose each answer option.
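A frequency table of this kind can be sketched in a few lines of Python. The example below counts how often each option was chosen and lists incorrect options that almost nobody picked, since those make guessing easier. The function names, the option labels A to D, and the 5% cut-off are assumptions chosen for illustration.

```python
from collections import Counter


def option_frequencies(choices, options=("A", "B", "C", "D")):
    """Count how many students chose each listed option.

    Responses that are not in `options` (for example blanks) are ignored.
    """
    counts = Counter(choices)
    return {option: counts.get(option, 0) for option in options}


def rarely_chosen_distractors(frequencies, correct_option, min_share=0.05):
    """Return incorrect options chosen by less than `min_share` of students."""
    total = sum(frequencies.values())
    if total == 0:
        return []
    return [
        option
        for option, count in frequencies.items()
        if option != correct_option and count / total < min_share
    ]


# Hypothetical responses from 20 students; "A" is the correct answer.
choices = ["A"] * 12 + ["B"] * 6 + ["C"] * 2
freqs = option_frequencies(choices)
print(freqs)  # {'A': 12, 'B': 6, 'C': 2, 'D': 0}
print(rarely_chosen_distractors(freqs, correct_option="A"))  # ['D']
```

In this made-up case option D attracts no one, so the question effectively has three options and is easier to guess than intended.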